Date: 6th through 9th of June 2013

Participating group members: Kenneth Baagøe, Morten D. Bech and Thomas Winding

Activity duration: 24 hours

Goal

Complete the development of the behaviors of the autonomous robots.

Plan

Implement the behaviors and test them one by one, then test them when they’re all running together. We might also try to add sounds to the different behaviors.

Progress

As described in an earlier entry [1] we needed five behaviors:

- Normal

- Hit by player robot

- Hunger

- Scared of player robot

- Avoid edges/other robots

We had already added some behaviors and started implementing their action() methods, which represent the actual functionality of the behaviors. Among them was the normal, hit and avoid behaviors, where the normal behavior and avoid behaviors seemed to work well.

The hit behavior

As we described in the previous entry [2], we had not decided how to implement the behavior of the autonomous robot when it was hit by the player robot. We had, however already implemented a relatively simple behavior where it would spin in place when hit. After some consideration we decided that we would stick with this behavior. The only thing that was changed in the behavior was that the time it would spin in place was extended as it, in its original incarnation, only spun for three seconds which seemed too short. In the end it ended up being “knocked out” for a total of eight seconds.

The action() method for the hit behavior

1 2 3 4 5 6 7 8 9 10 11 12 | public void action() { suppressed = false; active = true; Sound.playSample(new File("PacManDies.wav"), 100); AutAnimal.fastRotation(); AutAnimal.pilot.rotateRight(); long startTime = System.currentTimeMillis(); while (!suppressed && startTime + 8000 > System.currentTimeMillis()) Thread.yield(); AutAnimal.slowRotation(); AutAnimal.pilot.stop(); active = false; } |

The scared behavior

We described a scared behavior in a previous entry [1]. The idea stayed much the same as we imagined then.

This behavior was to rely on the infra-red sensor that we mounted on the front of the autonomous robot, as we planned on using an IR ball on the player robot so the autonomous robots could differentiate between it and other autonomous robots. The autonomous robot would then, when it detects the player robot, try to run away as fast it can.

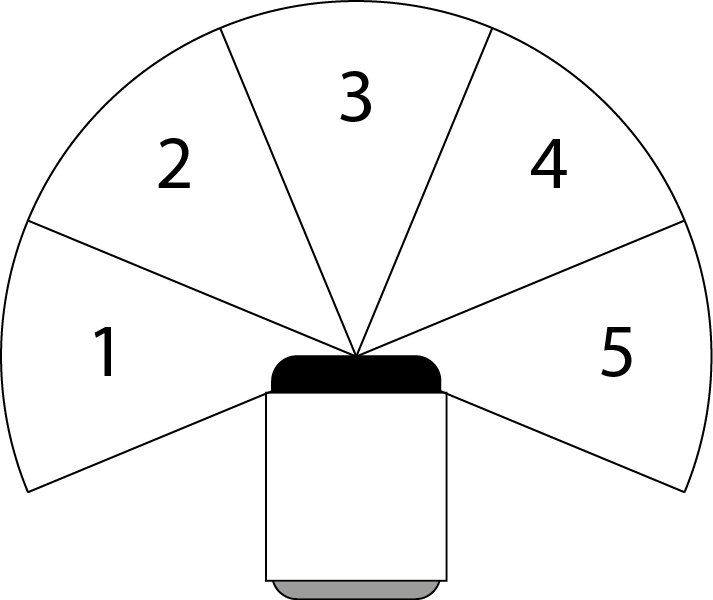

Using the IR sensor was quite reliable as the values it returned were very consistent. After further looking into the functionality of the sensor we were confused by the fact that the sensor could return five different values with getSensorValue(1), getSensorValue(2) ... getSensorValue(5), where we in the first test simply used the one that returned a value that seemed appropriate. After some experimenting we figured out that the sensor has multiple internal IR receivers, most likely placed in an inverted U-shape. This gave us the opportunity to decide how wide of a viewing angle the autonomous robots could have and after additional experimenting we decided to use receivers two, three and four, giving it a relatively wide viewing angle. Using all five receivers made it able to have an extremely wide viewing angle and be able to detect an infra-red source almost directly behind it, which we felt would make it hard to get close to it with the player robot.

We imagine the sensor has viewing angles that resemble what is shown in the figure below.

Thus our takeControl() method for the scared behavior ended up the following:

1 2 3 4 | public int takeControl() { if (ir.getSensorValue(2) > irThreshold || ir.getSensorValue(3) > irThreshold || ir.getSensorValue(4) > irThreshold) return 200; return 0; } |

The behavior we decided the robot would execute when detecting the player robot was to back off and turn away as fast as it could. It was implemented as shown below.

1 2 3 4 5 6 7 8 9 10 11 12 13 | public void action() { suppressed = false; AutAnimal.fastRotation(); AutAnimal.fastTravel(); int turn = Math.random() > 0.5 ? -1 : 1; int angle = 100 + random.nextInt(60); AutAnimal.pilot.travel(-(20+random.nextInt(30)), true); while (!suppressed && AutAnimal.pilot.isMoving()) Thread.yield(); AutAnimal.pilot.rotate(-turn * angle, true); while (!suppressed && AutAnimal.pilot.isMoving()) Thread.yield(); AutAnimal.slowRotation(); AutAnimal.slowTravel(); } |

Running this behavior by itself, it worked as we intended.

The hunger behavior

This behavior was intended to simulate a level of hunger in the autonomous robots. It would start off with the robot not feeling hunger and then slowly increase over time, at some point overtaking the normal, or idle, behavior of the robot. When this happened, the robot would start looking for food and, upon finding some food, it would eat and become satiated.

We implemented this behavior by adding an internal state on the robot keeping track of the hunger and returning this value as its motivation value up to a max of 100.

The implementation of the hunger state

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 | public Hunger() { Thread t = new Thread() { public void run() { while (true) { hunger++; try { Thread.sleep(random.nextInt(1000)+3000); } catch (InterruptedException e) { e.printStackTrace(); } } } }; t.setDaemon(true); t.start(); } |

The takeControl() method of the hunger behavior

1 2 3 4 | public int takeControl() { if (hunger > 100) {return 100;} return hunger; } |

As can be seen in the thread maintaining the hunger state, the hunger increases every 3-4 seconds, we chose to increase it randomly just to add more randomness to the behavior of the robot. When the robots hunger reaches a threshold, namely when its value becomes greater than that of the normal/idle behavior, it starts moving forward at a medium pace. If the robot encounters some food it will grab it, turn a bit and move away with it. At the same time it “eats” the food, resetting the hunger to 0. The robot detects food with the color sensor mounted on the front of it.

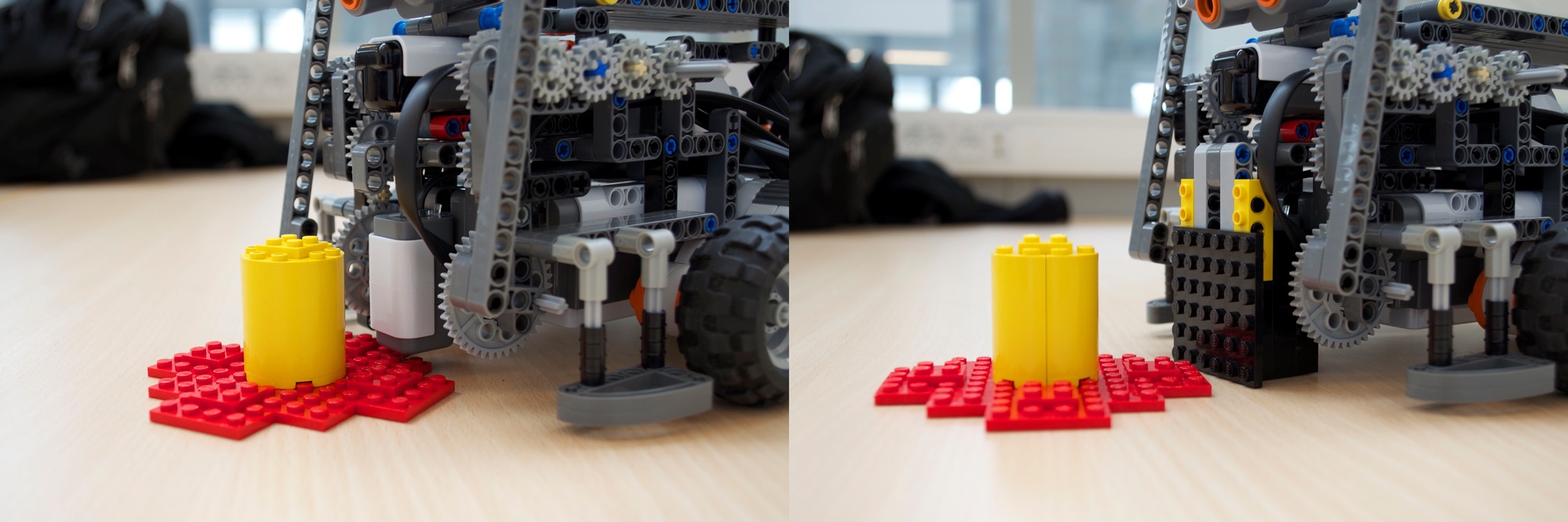

We described an idea of the food in entry 3 [2] and the basic idea stayed the same. We did, however, choose to make the food circular instead as we tried with the square and it turned out that the robot was not always able to grab the food. This happened if it came upon the food in some specific angles.

Testing

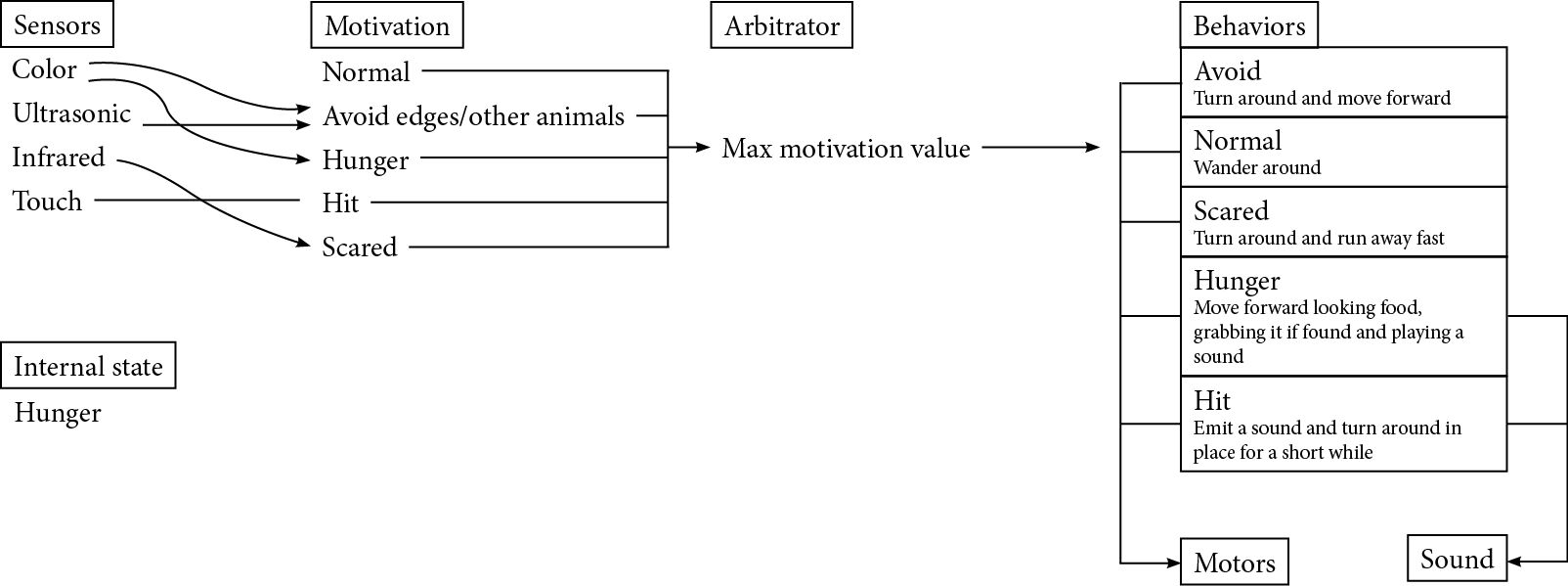

After completing the different behaviors and they seemed to work well in isolated tests, we wanted to try running the robots with all behaviors running. The different behaviors should be decided upon by the arbitrator according to the returned motivation values. Inspired by the diagram by Thiemo Krink [4] we made a similar diagram depicting the motivation network in our autonomous robots, see below.

Running the autonomous robots we quickly noted that the robots would often get stuck on the edges of the arena and that when the robot was hungry and got to the edge of the arena it would alternate between the hungry behavior and avoid behavior rapidly, meaning that it never completed either behavior.

The solution to the robots getting stuck on the edges of the arena was changing the avoid and scared behaviors. The way these behaviors worked was that they had the robot back off when it saw an obstacle/the player robot and turning. We changed these behaviors to, instead of backing up and turning, they would turn and move forward. This made the functionality of the robots much more reliable because the initial functionality of the behaviors had the robots “running blind” when executing these behaviors as they could not rely on the sensors, which were all front-mounted. Changing the behaviors to have the robot moving forwards instead of backwards enabled the robot to, once again, rely on its sensors so it did not bump into obstacles and get stuck on edges. Essentially we established that the robots should never move backwards as it would basically have no idea what it was doing.

To have the robot not rapidly switch between some behaviors that could interrupt each other we implemented an additional motivation value in some of the behaviors that would be returned when the behavior was already active.

Thus we ended up with the following motivation values:

| Behavior | Motivation value |

| Normal | 10 |

| Scared | 0, 200 (IR sensor), 105 (active) |

| Hit | 0, 300 (touch sensor), 205 (active) |

| Avoid | 0, 125 (ultrasonic/color sensor), 205 (active) |

| Hunger | 0-100 |

After implementing this functionality the problems with “competing” behaviors was solved.

Problems with the color sensor

After running the autonomous robots successfully multiple times we noticed that the robots were suddenly unable to differentiate between the light area and dark area of the arena which we used to tell when the robots were at the edge. After some experimenting we figured out that the cause of the inconsistencies in the color sensor was caused by a change in lighting in the room, it seemed that it got too light in the room when it was cloudy for the color sensor to be able to tell the light area from the dark. This meant that the robot would simply rotate in place since that was the initial part of the avoid behavior.

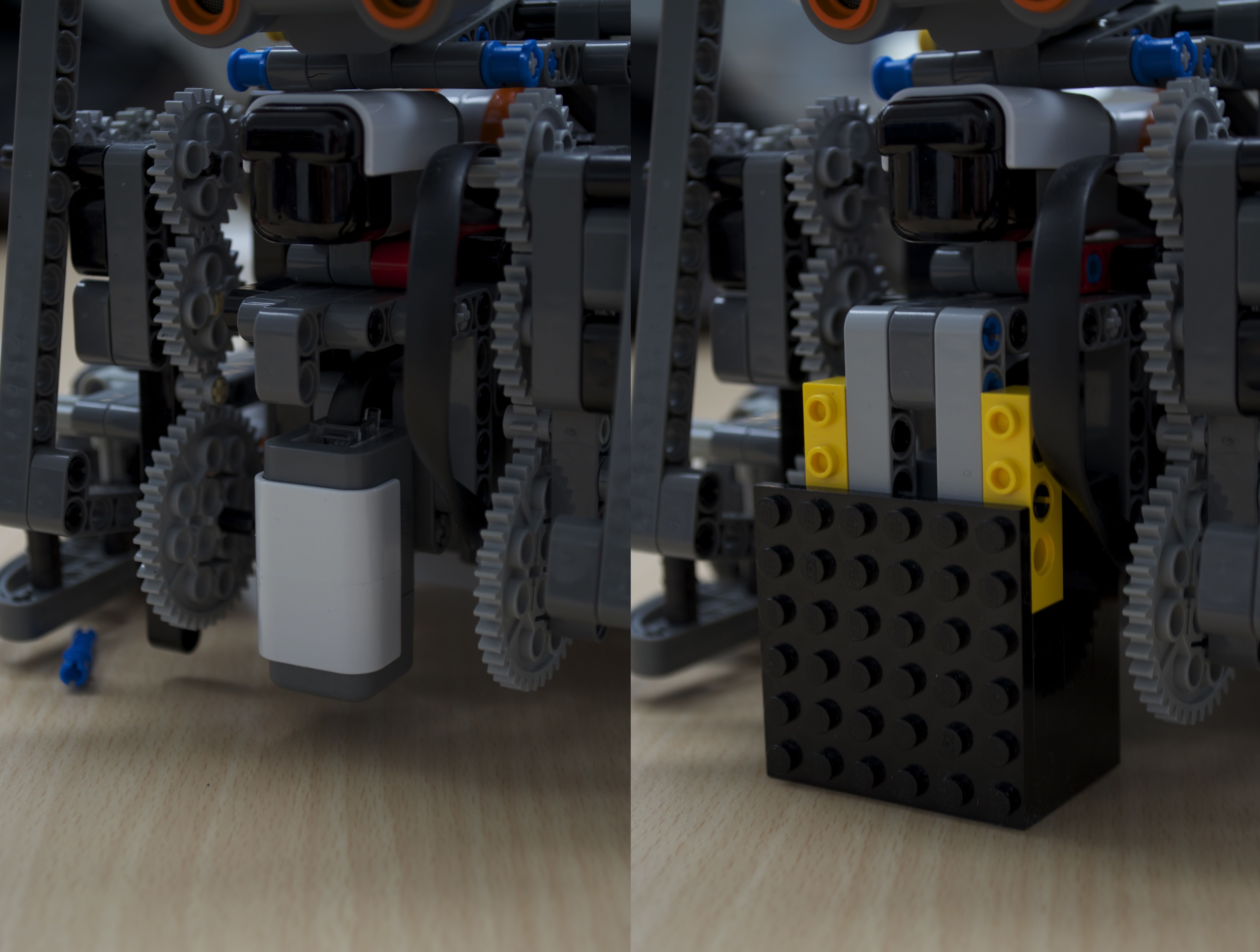

To solve this problem we mounted shielding around the color sensor which made the readings of the sensor reliable once again.

This, however, presented a new problem: The shielding on the sensor had to be very close to the ground in order to function properly. This meant that the sensor was now not able to hover above the red Lego plates that protruded from the “food”, the shielding would hit it. Thus the robot would simply push the food in front of it and never detect it when hungry.

To solve this new problem we exchanged the protruding Lego disk from the food to one made of cardboard which was thin enough that sensor could once again go over it and detect it.

Adding sound

We wanted to try adding some sound to the game to make it more entertaining. Playing sound on the NXT is very simple when using wave files as you simply use the Sound.playSample("file") method. The largest problem in this regard is the very limited Flash memory of 256KB, where the lejOS firmware seems to take up about 100KB. We tried adding two different small wave files that we found and noted that the NXT brick was only able to play one of them. The cause of this was that the NXT was only able to play 8-bit samples and one of them was, most likely, a 16-bit sample. Also worth mentioning is that the speaker on the NXT does not seem to be of a very good quality and thus good quality sound samples are probably wasted on it. We used this to our advantage, using the program Switch Sound File Converter[3], to reduce the quality, and also size, of sound samples and at the same time converting them to 8-bit samples. This gave us the chance to have multiple sound samples on each robot as the sound samples were usually in the 50-100KB range even if they were less than a second long since drastically reducing the sample rate did the same to the file size, often reducing the file to 5-10KB.

As the NXT seems to play sounds in a separate thread we also did not have to worry about it blocking the program on the robot and thus we could simply add in a sound where we wanted it without needing any major changes in the program. So far we added an eating sound when the robot finds food and a sound when the robot is hit.

Backlog

The autonomous robots are now working as we intended. We might try to find some more sounds to play on the robots when they are executing different behaviors but this will probably not be prioritized. We still need to finish construction on the remote controlled player robot which we will work on next time. The program for the remote controlled robot is mostly working, but we still need to experiment with its “weapon” and figure out how much the motor will need to rotate and whether it can properly “knock out” the autonomous robots. Additionally we might try to find some sound samples to play on the remote controlled robot.

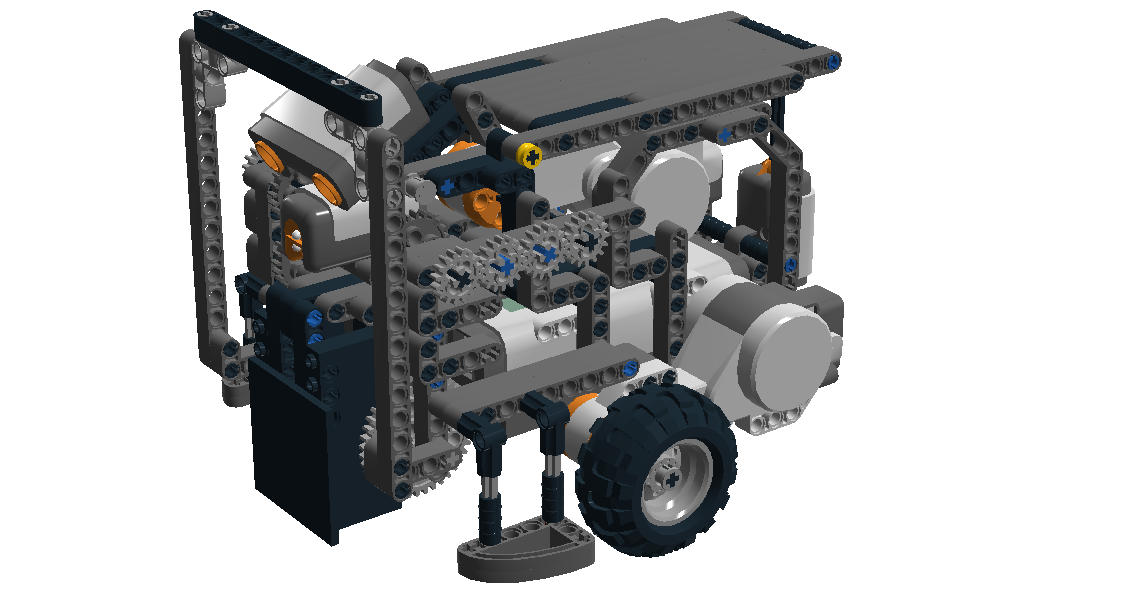

Lego Digital Designer

We have constructed a model of the autonomous robot in Lego Digital Designer, available at http://code.bech.in/digital_control/lab15/AutAnimal.lxf.

References

[1] End-course project 2, http://bech.in/?p=463

[2] End-course project 3, http://bech.in/?p=472

[3] http://www.nch.com.au/switch/index.html

[4] Thiemo Krink (in prep.), Motivation Networks – A Biological Model for Autonomous Agent Control