Date: 27th of April 2013

Participating group members: Kenneth Baagøe, Morten D. Bech and Thomas Winding

Activity duration: X hours

Goal

The goal of this lab session is to work with the tacho counter and the DifferentialPilot, as described in the lesson plan.

Plan

Our plan is to follow the instructions and complete the tasks in the lesson plan.

Progress

Navigation/Blightbot

We started out by running the test program Blightbot by Brian Bagnall [1]. However, as the TachoNavigator class has since been removed from lejOS, we used the Navigator class instead and gave it an instance of the DifferentialPilot class as its move controller. Controlling the Navigator class works much like the TachoNavigator, with one small exception: the Navigator’s movement methods are non-blocking, so it is important to call the waitForStop() method (or alternatively spin in an empty loop on isMoving()); otherwise the program will simply exit immediately.
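The non-blocking behaviour can be illustrated without an NXT. The sketch below is hypothetical: `SimulatedNavigator`, its 50 ms legs and the waypoints are our own stand-ins, not the lejOS `Navigator` class. `followPath()` returns immediately while a background thread does the "driving", so the caller has to wait (here with `waitForStop()`) before it can rely on the path being finished.

```java
import java.util.ArrayList;
import java.util.Collections;
import java.util.List;

// Hypothetical stand-in for a non-blocking navigator (NOT the lejOS Navigator class):
// followPath() returns at once and the "driving" happens on a background thread.
class SimulatedNavigator {
    private final List<float[]> arrivals = Collections.synchronizedList(new ArrayList<>());
    private Thread worker;

    // Starts driving through the waypoints and returns immediately, like a non-blocking call.
    void followPath(float[][] waypoints) {
        worker = new Thread(() -> {
            for (float[] wp : waypoints) {
                try {
                    Thread.sleep(50); // pretend each leg takes 50 ms to drive
                } catch (InterruptedException e) {
                    return; // abandon the path if interrupted
                }
                arrivals.add(wp); // record that this waypoint was reached
            }
        });
        worker.start();
    }

    // True while the background "drive" is still running.
    boolean isMoving() {
        return worker != null && worker.isAlive();
    }

    // Blocks the caller until the path has been completed.
    void waitForStop() {
        Thread w = worker;
        if (w == null) {
            return;
        }
        try {
            w.join();
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
        }
    }

    List<float[]> arrivals() {
        return arrivals;
    }

    public static void main(String[] args) {
        SimulatedNavigator nav = new SimulatedNavigator();
        // Illustrative waypoints in cm (hypothetical, not Blightbot's path).
        nav.followPath(new float[][] {{100, 0}, {100, 100}, {0, 0}});
        System.out.println("moving right after the call: " + nav.isMoving());
        nav.waitForStop(); // without this, main would read the result before the path is done
        System.out.println("waypoints reached: " + nav.arrivals().size());
    }
}
```

On the robot, the corresponding pattern is to issue the Navigator’s movement command and then call `waitForStop()` before the program continues.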

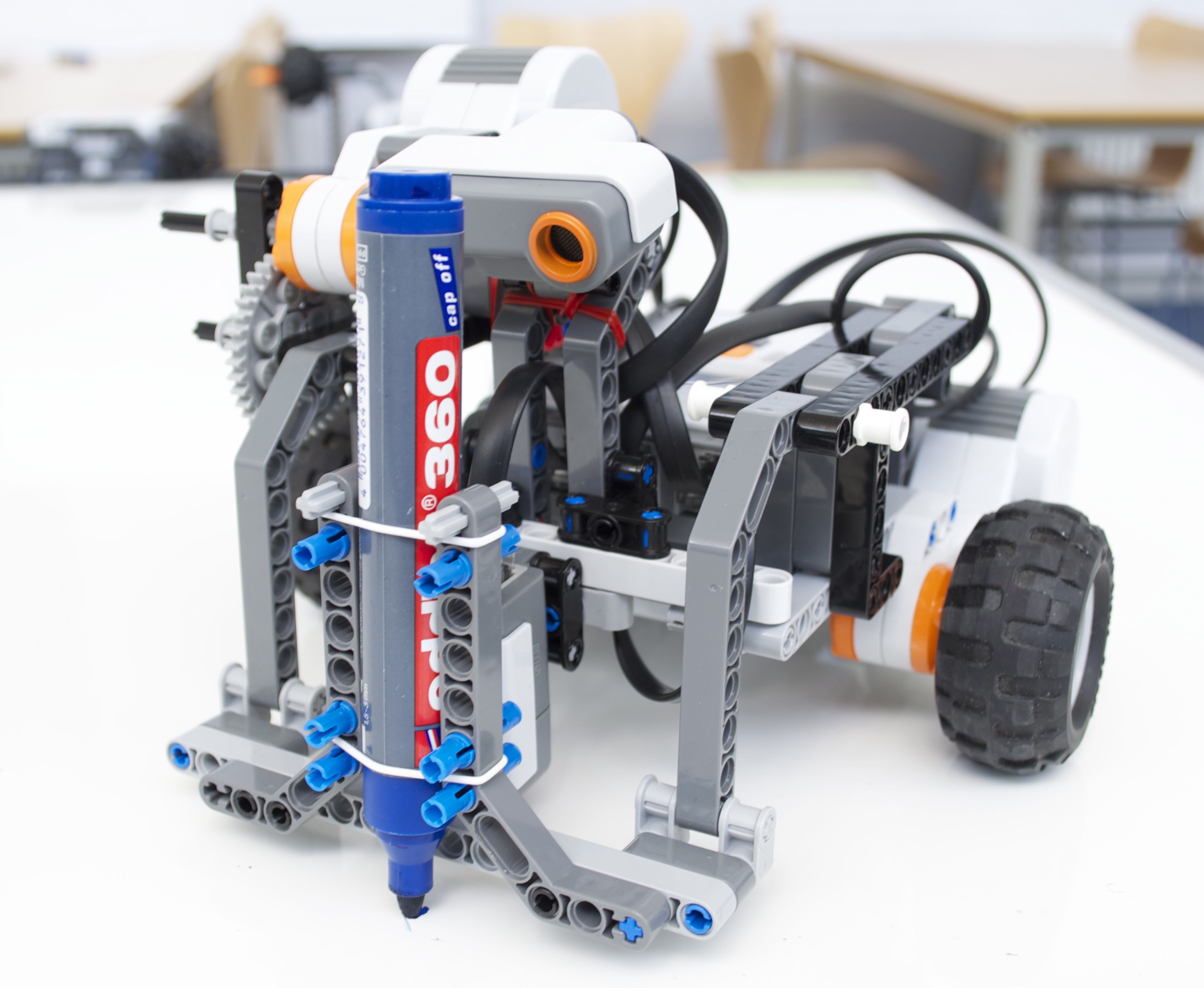

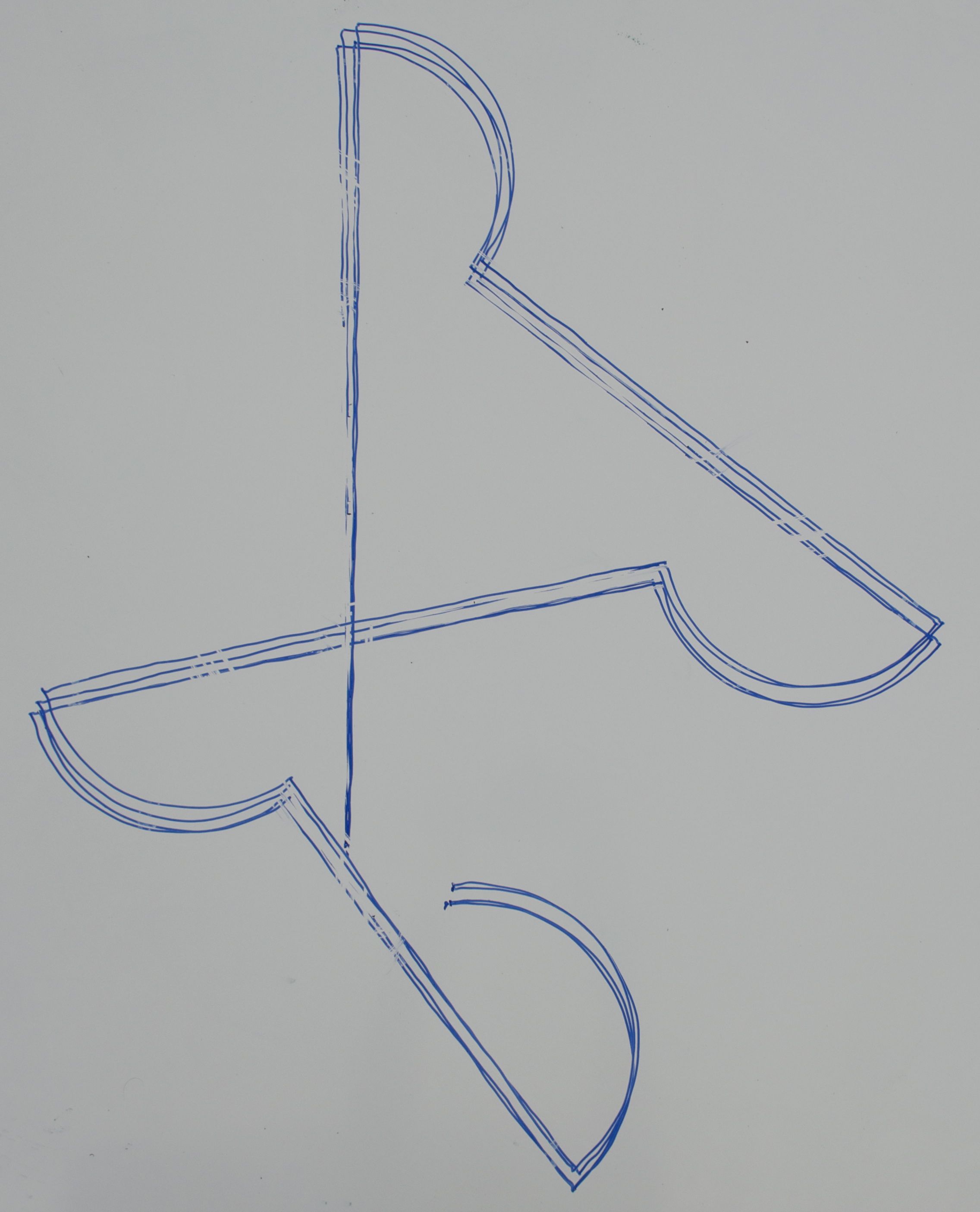

We used Maja Mataric’s low-fi approach to gathering data on the robot’s movement [2]: we strapped a whiteboard marker to the front of the robot and ran it on a whiteboard. The result after three runs can be seen in the picture below.

As the picture shows, the robot ends up close to its starting point, but not exactly on it: the angles turned were not quite right, which caused the deviation. We expected this before we began, as we had made similar observations when building our contestant robot for the Alishan track competition in Lesson 7 [3], where a command to turn 90 degrees would be off by a degree or two. Brian Bagnall notes this as well, saying that Blightbot does a good job of measuring distance but that his weakness arises when he rotates [1].
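To get a feel for how a turn error of a degree or two turns into a visible deviation, the following dead-reckoning sketch drives a closed path in which every rotation overshoots by a fixed amount while distances are exact. The square path, the constant overshoot and the class name are assumptions for illustration, not measurements from our runs.

```java
// Dead-reckoning sketch (hypothetical): every rotation overshoots by a fixed error,
// while distances are driven exactly. The robot believes it reaches each waypoint;
// the simulation tracks where it actually ends up.
class TurnErrorSimulation {

    // Wraps an angle in degrees to the range (-180, 180].
    static double normalize(double deg) {
        double a = deg % 360.0;
        if (a > 180.0) a -= 360.0;
        if (a <= -180.0) a += 360.0;
        return a;
    }

    // Returns the actual {x, y} end position after following the waypoints from (0, 0)
    // with heading 0, when each turn is off by turnErrorDeg in the direction of the turn.
    static double[] simulate(double[][] waypoints, double turnErrorDeg) {
        double believedX = 0, believedY = 0, believedHeading = 0;
        double actualX = 0, actualY = 0, actualHeading = 0;
        for (double[] wp : waypoints) {
            double dx = wp[0] - believedX;
            double dy = wp[1] - believedY;
            double targetHeading = Math.toDegrees(Math.atan2(dy, dx));
            double turn = normalize(targetHeading - believedHeading);
            double distance = Math.hypot(dx, dy);

            // The robot plans the turn and distance from where it believes it is...
            believedHeading = targetHeading;
            believedX = wp[0];
            believedY = wp[1];

            // ...but the physical turn is slightly off, while the distance is exact.
            actualHeading += turn + Math.signum(turn) * turnErrorDeg;
            actualX += distance * Math.cos(Math.toRadians(actualHeading));
            actualY += distance * Math.sin(Math.toRadians(actualHeading));
        }
        return new double[] {actualX, actualY};
    }

    public static void main(String[] args) {
        // Hypothetical closed square path in cm that should end back at the start.
        double[][] path = {{100, 0}, {100, 100}, {0, 100}, {0, 0}};
        for (double err : new double[] {0.0, 1.0, 2.0}) {
            double[] end = simulate(path, err);
            System.out.printf("turn error %.1f deg -> ends %.1f cm from start%n",
                    err, Math.hypot(end[0], end[1]));
        }
    }
}
```

Under these assumptions, a 2 degree overshoot per turn on a 100 cm square leaves the robot roughly 10 cm from its start, even though every distance is perfect.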

Avoiding obstacles

After finishing the Blightbot test program, we moved on to avoiding obstacles in front of the robot while it traversed the same path as Blightbot. To do this, we added an ultrasonic sensor to the robot and used it to detect whether anything was in front of it.

Inspired by an implementation of an EchoNavigator class [4], we used the RangeFeatureDetector class of lejOS, which notifies its listeners when an object is detected within a given distance. When an object is found within this distance, the detector calls the listener’s featureDetected() method, which we implemented to turn a random angle to either the left or the right and then move forward a bit. After turning away from the obstacle, the robot would then continue to the next waypoint on the path while still continuously listening for objects in front of it.
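The reaction performed in featureDetected() can be sketched independently of the lejOS classes. In the hypothetical `AvoidanceReaction` below, the 30 to 90 degree turn range, the 20 cm forward distance and the sign convention (positive = left) are our assumptions; the method only decides on the maneuver, which a real listener would then hand to the pilot.

```java
import java.util.Random;

// Hypothetical sketch of the avoidance reaction: when an object is reported closer than
// a threshold, turn a random angle to the left or right, then drive forward a bit.
// Names, thresholds and angle limits are illustrative assumptions, not lejOS API.
class AvoidanceReaction {
    static final double MIN_TURN_DEG = 30.0; // assumed smallest avoidance turn
    static final double MAX_TURN_DEG = 90.0; // assumed largest avoidance turn
    static final double FORWARD_CM = 20.0;   // assumed distance driven after turning

    private final Random rng;
    private final double thresholdCm;

    AvoidanceReaction(Random rng, double thresholdCm) {
        this.rng = rng;
        this.thresholdCm = thresholdCm;
    }

    // Returns {turnDeg, forwardCm} if the reading triggers avoidance, or null otherwise.
    // Positive turnDeg means left, negative means right (assumed convention).
    double[] onRangeReading(double rangeCm) {
        if (rangeCm > thresholdCm) {
            return null; // nothing close enough: keep heading for the waypoint
        }
        double magnitude = MIN_TURN_DEG + rng.nextDouble() * (MAX_TURN_DEG - MIN_TURN_DEG);
        double turn = rng.nextBoolean() ? magnitude : -magnitude; // pick a side at random
        return new double[] {turn, FORWARD_CM};
    }

    public static void main(String[] args) {
        AvoidanceReaction reaction = new AvoidanceReaction(new Random(), 25.0);
        double[] maneuver = reaction.onRangeReading(15.0);
        System.out.printf("turn %.1f deg, then forward %.1f cm%n", maneuver[0], maneuver[1]);
    }
}
```

Choosing the side at random keeps the robot from always dodging the same way, at the cost of occasionally turning towards the side where the path continues.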

Before using the RangeFeatureDetector class, we tried to implement a method that made the robot stop whenever it saw an object in front of it. This failed: the robot would still see the object while trying to move away from it and would get stuck, repeatedly stopping in front of the object. The RangeFeatureDetector has an enableDetection() method that makes it easy to switch object detection off and on, so detection can be turned off while the robot moves away from the object, which remedied this problem.
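The effect of switching detection off can be shown with a simplified model. `countReactions()` below is hypothetical: it replays a stream of range samples in which the obstacle stays in view for a few samples while the robot turns away, and counts how often the robot reacts. Without gating, every one of those samples triggers a new stop; with detection disabled for the duration of the maneuver (modelled as a fixed number of samples, which is an assumption), the obstacle triggers a single reaction.

```java
// Hypothetical model of gating obstacle detection during an avoidance maneuver
// (the idea behind calling enableDetection(false) and later enableDetection(true)).
class DetectionGateDemo {

    // Counts how many times the robot reacts to a stream of range samples (cm).
    // With gating, detection stays off for maneuverSamples samples after a reaction.
    static int countReactions(double[] rangesCm, double thresholdCm,
                              boolean gate, int maneuverSamples) {
        int reactions = 0;
        int disabledFor = 0; // samples left before detection is switched back on
        for (double range : rangesCm) {
            if (disabledFor > 0) {
                disabledFor--;
                continue; // detection off: ignore the obstacle we are turning away from
            }
            if (range < thresholdCm) {
                reactions++; // without gating, each of these would be another stop
                if (gate) {
                    disabledFor = maneuverSamples;
                }
            }
        }
        return reactions;
    }

    public static void main(String[] args) {
        // The obstacle stays within 25 cm for four samples while the robot turns away.
        double[] samples = {80, 60, 20, 18, 19, 22, 70, 90};
        System.out.println("ungated reactions: " + countReactions(samples, 25, false, 4));
        System.out.println("gated reactions:   " + countReactions(samples, 25, true, 4));
    }
}
```

For this sample stream the ungated version reacts four times and the gated version once.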

Below is a video of the robot traversing the short path while detecting objects.

Calculating pose

In [5], the pose of the robot is recalculated after every movement of some delta distance, while in [6] it is updated every time some delta time has passed. Furthermore, [6] uses different formulas for calculating the pose when moving in an arc and when moving straight, whereas [5] does not distinguish between the two. In terms of accuracy, [5] is more accurate as long as its delta distance is smaller than the distance the robot covers during one delta time step in [6]. It should also be mentioned that the arc formulas in [6] take the angular velocity into account, which, at least in theory, should make them more precise, depending on the delta time.
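The difference between the two update styles can be written out with standard differential-drive kinematics. The notation is ours and this is a sketch, not a transcription of [5] or [6]: $d_l$ and $d_r$ are the distances travelled by the left and right wheels during one step, $b$ is the track width, $(x_k, y_k, \theta_k)$ is the pose before the step, and positive $\Delta\theta$ means turning left.

$$
\begin{aligned}
d &= \frac{d_r + d_l}{2}, \qquad \Delta\theta = \frac{d_r - d_l}{b} \\[4pt]
\text{straight step:}\quad x_{k+1} &= x_k + d\cos\theta_k, \qquad y_{k+1} = y_k + d\sin\theta_k \\[4pt]
\text{arc step } (\Delta\theta \neq 0,\ R = d/\Delta\theta):\quad x_{k+1} &= x_k + R\,\bigl(\sin(\theta_k + \Delta\theta) - \sin\theta_k\bigr) \\
y_{k+1} &= y_k - R\,\bigl(\cos(\theta_k + \Delta\theta) - \cos\theta_k\bigr) \\[4pt]
\text{both:}\quad \theta_{k+1} &= \theta_k + \Delta\theta
\end{aligned}
$$

For a time-based update with wheel speeds $v_l$ and $v_r$ over a step $\Delta t$, the same formulas apply with $d = v\,\Delta t$ and $\Delta\theta = \omega\,\Delta t$, where $v = (v_r + v_l)/2$ and $\omega = (v_r - v_l)/b$ is the angular velocity, so $R = v/\omega$. As $\Delta\theta \to 0$ the arc step reduces to the straight step, which is why the straight-line approximation becomes more accurate the smaller each step is.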

The lejOS OdometryPoseProvider class follows [6] in the sense that it distinguishes between driving straight and driving in arcs. The calculation of the new pose is triggered by calling the updatePose() method with a Move event object, which could be created either after a certain time or after a certain distance moved; we have not been able to determine whether it is time or distance that triggers the creation of Move events. Depending on the sampling interval, it could be beneficial to decrease the delta to get more samples and thereby a more precise estimate. However, it should also be noted that, depending on how the distance travelled since the latest event is measured, a problem may arise if that value becomes zero, e.g. if it is derived from the motors’ tacho counters, because then the pose might not be right at all. The updatePose() method code can be seen below.
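Separately from the lejOS source, the following is our own minimal sketch of an event-driven pose update of the kind described above; it is not the OdometryPoseProvider code. It assumes each progress event reports the cumulative distance travelled and angle turned since the current move started (the lejOS Move class exposes such values via getDistanceTraveled() and getAngleTurned(), but here they are plain doubles), and it advances the pose by the increment since the previous event. The 0.2 degree threshold for treating a step as straight is an assumption.

```java
// Hypothetical event-driven odometry sketch (not the lejOS OdometryPoseProvider source).
// Each event reports cumulative distance (cm) and angle (deg) since the move started;
// the pose is advanced by the increment since the previous event.
class OdometrySketch {
    static final double STRAIGHT_EPS_DEG = 0.2; // assumed threshold for "driving straight"

    double x, y, headingDeg;                 // current pose estimate (heading not wrapped)
    private double lastDistance, lastAngle;  // cumulative values at the previous event

    // Call when a new move starts, so increments are measured from zero again.
    void startMove() {
        lastDistance = 0;
        lastAngle = 0;
    }

    // Advances the pose using the cumulative distance and angle from a progress event.
    void update(double distanceSoFar, double angleSoFar) {
        double d = distanceSoFar - lastDistance;
        double dTheta = angleSoFar - lastAngle;
        double h = Math.toRadians(headingDeg);
        if (Math.abs(dTheta) < STRAIGHT_EPS_DEG) {
            // Straight step: move d along the current heading.
            x += d * Math.cos(h);
            y += d * Math.sin(h);
        } else {
            // Arc step: move along a circle of radius d / dTheta (0 for turning on the spot).
            double t = Math.toRadians(dTheta);
            double r = d / t;
            x += r * (Math.sin(h + t) - Math.sin(h));
            y -= r * (Math.cos(h + t) - Math.cos(h));
        }
        headingDeg += dTheta;
        lastDistance = distanceSoFar;
        lastAngle = angleSoFar;
    }

    public static void main(String[] args) {
        // A quarter circle of radius 50 cm to the left, reported in three events.
        OdometrySketch pose = new OdometrySketch();
        pose.startMove();
        double length = 50.0 * Math.PI / 2.0;
        pose.update(length / 3.0, 30.0);
        pose.update(2.0 * length / 3.0, 60.0);
        pose.update(length, 90.0);
        System.out.printf("x=%.2f y=%.2f heading=%.1f%n", pose.x, pose.y, pose.headingDeg);
    }
}
```

Because increments are computed from cumulative values, an event that reports no progress (a zero increment) leaves the pose unchanged rather than corrupting it; whether the real sensor data behaves that way is exactly the concern raised above.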

Conclusion

As we noted above, the object-avoiding robot skips to the next waypoint when it encounters an object. An interesting further development could be to have the robot try to find a way around the object and make it to the intended position before moving on to the next waypoint.

References

[1] Brian Bagnall, Maximum LEGO NXT: Building Robots with Java Brains, Chapter 12, Localization, pp. 297–298.

[2] Maja J. Mataric, Integration of Representation Into Goal-Driven Behavior-Based Robots, IEEE Transactions on Robotics and Automation, 8(3), June 1992, pp. 304–312.

[3] http://bech.in/?p=205

[4] http://fedora.cis.cau.edu/~pmolnar/CIS687F12/Programming-LEGO-Robots/samples/src/org/lejos/sample/echonavigator/EchoNavigator.java

[5] Java Robotics Tutorials, Enabling Your Robot to Keep Track of its Position. You could also look into Programming Your Robot to Navigate to see how an alternative to the leJOS classes could be implemented.

[6] Thomas Hellstrom, Forward Kinematics for the Khepera Robot.