Date: 28th of February 2013

Participating group members: Thomas Winding and Morten D. Bech. Kenneth joined us one hour into the exercises on Thursday, and everyone was present on Saturday.

Activity duration

Thursday 3.5 hours

Saturday 5.0 hours

Overall goal

The overall goal for this lesson is to make a robot that follows a black line using a light sensor and a PID control algorithm. The robot should also be able to detect the color green and stop when it does.

Overall plan

To complete our goal, we will follow the instructions in the lesson plan, which is available here.

Black/White Detection

We would like to know what values our light sensor reports for light and dark areas, and for different colors. As a small extra exercise, we would like to compare these measurements with the ones we made in our first lab session.

Plan

We already have the light sensor mounted on the robot from the previous weeks, so we simply have to do the measurements. Since Ole recommended using raw values from the sensor instead of the percentage values normally returned by the readValue() method, we will change the program to read raw values. We will, however, also measure the percentage values for the comparison mentioned above.

Result

The measurements we obtained from the sensor were:

| Color | Percentage (%) | Raw value |
|---|---|---|
| White | 57 | 431 |
| Black | 38 | 625 |
| Green | 44 | 573 |

Conclusion

The values we got this time differ slightly from the values we obtained in the first lesson. We assume this is because we placed the light sensor somewhat farther from the surface in the first lesson.

Line Follower with Calibration

The goal here is to get familiar with the program LineFollowerCal and determine how it works.

Plan

To accomplish our goal, we will first read the supplied program and try to determine what it is supposed to do. Afterwards we will upload it to our robot, run it and observe what happens.

Result

After reading the code, we expected that the robot would most likely behave like a bang-bang robot. We uploaded the program to the NXT and ran it. We captured the robot's behaviour in a short video, which is available here or can be watched below.

Conclusion

As expected, the robot behaved like a bang-bang robot: the values for white and black are calibrated and stored first, a threshold is calculated from them, and the robot then moves forward while turning right or left depending on whether it measures a black or a white value.
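The decision rule described above can be sketched in plain Java. This is a minimal sketch, not the LineFollowerCal code: the class BangBang, its methods and the turn directions are hypothetical, and we assume the threshold is the midpoint of the calibrated black and white values. Because raw readings are higher on black while percentages are higher on white (see the table above), the comparison first checks which side of the threshold black lies on.

```java
// Hypothetical sketch of a bang-bang decision; not the LineFollowerCal source.
class BangBang {
    enum Turn { LEFT, RIGHT }

    // Assumed threshold: halfway between the calibrated black and white values.
    static int threshold(int black, int white) {
        return (black + white) / 2;
    }

    // True if the reading lies on the black side of the threshold.
    // Works for raw values (black > white) and percentages (black < white).
    static boolean isBlack(int value, int black, int white) {
        int t = threshold(black, white);
        return black > white ? value > t : value < t;
    }

    // Turn one way on black and the other way on white (directions are arbitrary here).
    static Turn decide(int value, int black, int white) {
        return isBlack(value, black, white) ? Turn.LEFT : Turn.RIGHT;
    }

    public static void main(String[] args) {
        int black = 625, white = 431; // raw values from the measurement table
        System.out.println(threshold(black, white));    // 528
        System.out.println(decide(600, black, white));  // LEFT
        System.out.println(decide(450, black, white));  // RIGHT
    }
}
```

Since the robot only ever chooses between two fixed actions, it zig-zags along the edge of the line, which is the bang-bang behaviour we observed.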

ColorSensor with Calibration & Line follower that stops in a Goal Zone

We want to extend the functionality of the line-following robot to include detecting the color green. When it detects green, the robot should stop.

Plan

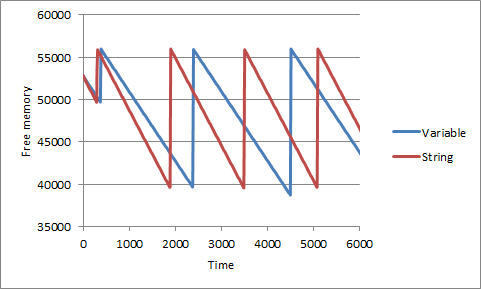

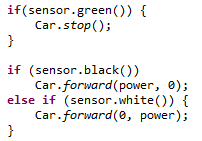

To add the new functionality, we created a class called ColorSensor, as suggested in the lesson plan. We moved much of the functionality from the BlackWhiteSensor class into the new class and added a new variable, green, for the calibrated value of green. Furthermore, we need to add a boolean method, akin to the black() and white() methods, for checking whether the sensor sees green.

Result

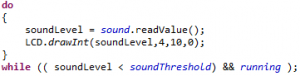

We made the needed changes to the program and found that we had to adjust the black/white decision slightly: if the sensor sees green, it must not also report white or black, as illustrated below.
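The classification rule can be sketched as follows. This is a hypothetical stand-in for our ColorSensor class (the name ColorClassifier and the constructor are invented for illustration), and it assumes green is detected when a reading falls inside an interval of a given width centred on the calibrated green value. The key point is that black() and white() return false whenever green() is true.

```java
// Hypothetical sketch of green-aware classification; not our actual ColorSensor code.
class ColorClassifier {
    private final int black, white, green, greenHalfWidth;

    // greenWidth: assumed total width of the interval accepted as green.
    ColorClassifier(int black, int white, int green, int greenWidth) {
        this.black = black;
        this.white = white;
        this.green = green;
        this.greenHalfWidth = greenWidth / 2;
    }

    // Green: reading within the interval centred on the calibrated green value.
    boolean green(int value) {
        return Math.abs(value - green) <= greenHalfWidth;
    }

    // Black: on the black side of the midpoint threshold, and not green.
    boolean black(int value) {
        if (green(value)) return false;
        int t = (black + white) / 2;
        return black > white ? value > t : value < t;
    }

    // White: neither green nor black.
    boolean white(int value) {
        return !green(value) && !black(value);
    }

    public static void main(String[] args) {
        // Raw values from the measurement table; an interval of 40 is assumed.
        ColorClassifier c = new ColorClassifier(625, 431, 573, 40);
        System.out.println(c.green(580)); // true
        System.out.println(c.black(580)); // false, green takes precedence
        System.out.println(c.black(610)); // true
        System.out.println(c.white(450)); // true
    }
}
```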

A video documenting the robot in action was recorded and is available here or can be watched below.

Conclusion

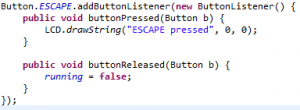

With some small modifications to the code that decides when we detect black and white, and by treating readings within an interval of 40 around the calibrated green value as green, it worked smoothly. For the interested reader, the code of the ColorSensor class is available here. The change to the LineFollowerCal class was a new else-if clause in the while-loop that checks whether the sensor sees green and, if so, stops the motors.
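The shape of the modified loop can be sketched with simulated readings instead of a real sensor. This is a hypothetical sketch: GoalZoneLoop, the string commands standing in for motor calls, and the interpretation of the interval of 40 as ±20 around the calibrated green value are all assumptions. It shows why the extra else-if works: because black() and white() are false on green, the third branch is reached exactly when the sensor sees green.

```java
import java.util.ArrayList;
import java.util.List;

// Hypothetical sketch of the while-loop with the added else-if for green.
class GoalZoneLoop {
    // Raw calibration values from the measurement table; interval width is assumed.
    static final int BLACK = 625, WHITE = 431, GREEN = 573, GREEN_WIDTH = 40;

    static boolean green(int v) { return Math.abs(v - GREEN) <= GREEN_WIDTH / 2; }
    // Raw readings are higher on black, so black means "above the midpoint".
    static boolean black(int v) { return !green(v) && v > (BLACK + WHITE) / 2; }
    static boolean white(int v) { return !green(v) && !black(v); }

    // Runs the loop over simulated readings and records the commands issued.
    static List<String> run(int[] readings) {
        List<String> commands = new ArrayList<>();
        int i = 0;
        boolean running = true;
        while (running && i < readings.length) {
            int v = readings[i++];
            if (black(v)) {
                commands.add("LEFT");
            } else if (white(v)) {
                commands.add("RIGHT");
            } else if (green(v)) { // the added clause: stop in the goal zone
                commands.add("STOP");
                running = false;
            }
        }
        return commands;
    }

    public static void main(String[] args) {
        System.out.println(run(new int[] {610, 450, 600, 575, 440}));
        // prints [LEFT, RIGHT, LEFT, STOP]
    }
}
```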

PID Line Follower

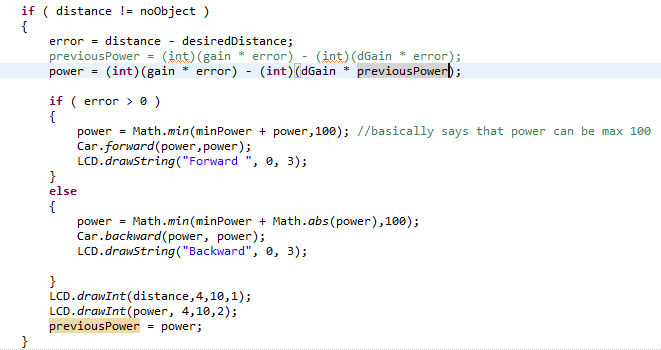

Building on the light-sensor line follower, we will write a new line-following program for the robot, this time using PID regulation.

Plan

We are going to implement the parts of the PID regulation one by one, starting with the proportional control, then the integral and finally the derivative. After implementing each part, we will also run the program on the robot to make sure that it is functional.
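One PID update, in the style described in [1], can be sketched as below. This is a minimal sketch under stated assumptions, not our class: PidLineFollower, the base power tp, the clamping to [-100, 100] and all constants are hypothetical, and no leJOS calls are made. The error is the reading minus an offset halfway between black and white, and the correction turn is added to one motor and subtracted from the other. Implementing the terms one at a time corresponds to starting with ki = kd = 0 and then enabling ki and kd.

```java
// Hypothetical sketch of a PID line-follower step; constants are illustrative only.
class PidLineFollower {
    private final double kp, ki, kd;
    private final int offset; // target reading: midpoint between black and white
    private final int tp;     // base power applied to both motors
    private double integral = 0;
    private double lastError = 0;

    PidLineFollower(double kp, double ki, double kd, int offset, int tp) {
        this.kp = kp;
        this.ki = ki;
        this.kd = kd;
        this.offset = offset;
        this.tp = tp;
    }

    // Clamp a power value to the assumed range [-100, 100] and round it.
    private static int clamp(double power) {
        return (int) Math.round(Math.max(-100, Math.min(100, power)));
    }

    // Computes {leftPower, rightPower} for one light reading.
    int[] step(int reading) {
        double error = reading - offset;       // P: distance from the line edge
        integral += error;                     // I: error accumulated over time
        double derivative = error - lastError; // D: change since the last reading
        lastError = error;
        double turn = kp * error + ki * integral + kd * derivative;
        return new int[] {clamp(tp + turn), clamp(tp - turn)};
    }

    public static void main(String[] args) {
        // Offset 528 = (625 + 431) / 2, the midpoint of the raw values measured earlier.
        PidLineFollower p = new PidLineFollower(0.1, 0.0, 0.0, 528, 50);
        int[] powers = p.step(578);
        System.out.println(powers[0] + " " + powers[1]); // 55 45
    }
}
```

Which motor receives tp + turn depends on which edge of the line is followed and on the sign convention of the readings; with raw values a darker surface gives a larger reading.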

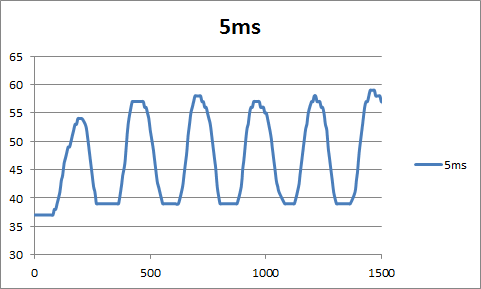

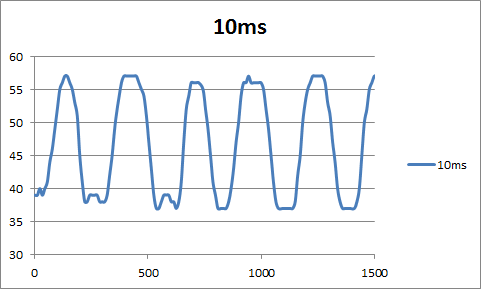

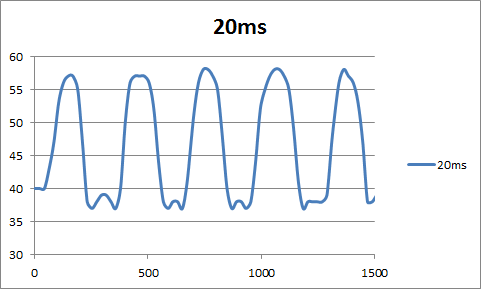

Results

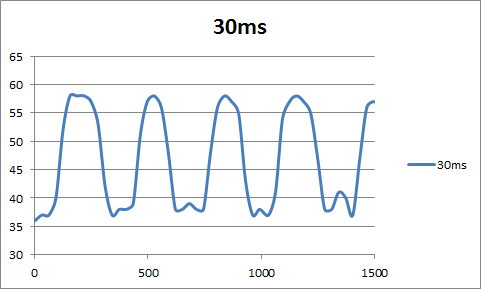

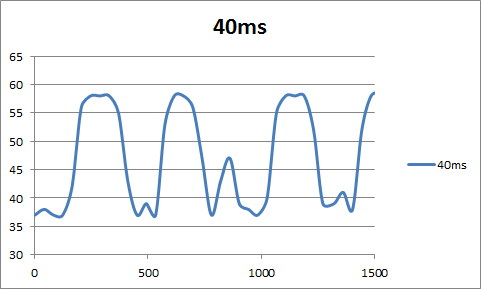

We implemented our PID line follower based on [1], a note on the subject. We started with the P-term and got that to work fairly quickly. However, when we added the I-term, the robot quite quickly began to spin around the line; after implementing the D-term, it stopped spinning and instead began to follow the line. Finally, we tried to tweak the constants so that the robot would follow the line more smoothly, but after about an hour the results were not improving and we stopped. While tweaking the constants, we sometimes found that the robot had a very hard time following the line in very sharp corners.

We made a small video clip of the robot which is available here or can be seen below.

Conclusion

As can be seen in the video clip, the robot oscillates quite a bit, and, as mentioned earlier, we had considerable trouble tweaking the constants to improve the robot's performance.

The class code is available here.

Color sensor

The final task is to implement a program that uses a color sensor to follow a black line and stops when it sees green.

Plan

Our plan is to use a simple bang-bang algorithm in which the color reported by the sensor decides the direction and speed of the robot. We will try to smooth the curves by working with five intervals, or colors: black, dark gray, gray, light gray and white. Finally, if the color is green, we stop the robot.
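The plan above amounts to a lookup from color to motor powers. The sketch below is hypothetical: the Shade enum stands in for whatever color ID the sensor returns, and the power values are invented for illustration. Darker readings turn the robot one way and lighter readings the other (which way depends on which edge of the line is followed), gray drives straight, and green stops both motors.

```java
// Hypothetical sketch of a five-level bang-bang controller plus a green stop.
class ColorBangBang {
    enum Shade { BLACK, DARK_GRAY, GRAY, LIGHT_GRAY, WHITE, GREEN }

    // Returns {leftPower, rightPower}; the further from gray, the sharper the turn.
    static int[] powers(Shade s) {
        switch (s) {
            case BLACK:      return new int[] {60, 10};
            case DARK_GRAY:  return new int[] {60, 35};
            case GRAY:       return new int[] {50, 50};
            case LIGHT_GRAY: return new int[] {35, 60};
            case WHITE:      return new int[] {10, 60};
            case GREEN:      return new int[] {0, 0}; // goal zone: stop
            default:         throw new IllegalArgumentException("unknown shade: " + s);
        }
    }

    public static void main(String[] args) {
        for (Shade s : Shade.values()) {
            int[] p = powers(s);
            System.out.println(s + " -> left " + p[0] + ", right " + p[1]);
        }
    }
}
```

Using five levels instead of two means small deviations from the edge give gentle corrections, which is the smoothing we are aiming for.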

Results

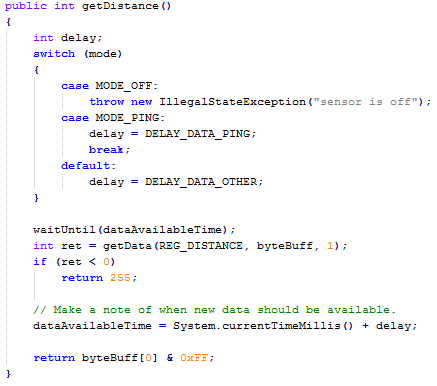

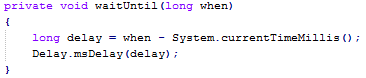

The implementation was quickly in place; however, we had a lot of trouble using the color sensor correctly. Fortunately, we found this example, which was a great help.

After a minor change to the code, it worked very well, as can be seen in the video clip below or here.

Conclusion

As can be seen in the video clip, the robot works nicely, but we have not used PID control here: the sensor returns either an RGB vector or a color ID, and the color ID was the easiest for us to work with.

The class code is available here.

Status

We had some trouble tuning the PID controller and therefore need to figure out how to do it properly. This is very important for next week's Segway robot, which is an inverted pendulum.

References

[1] A PID Controller For Lego Mindstorms Robots